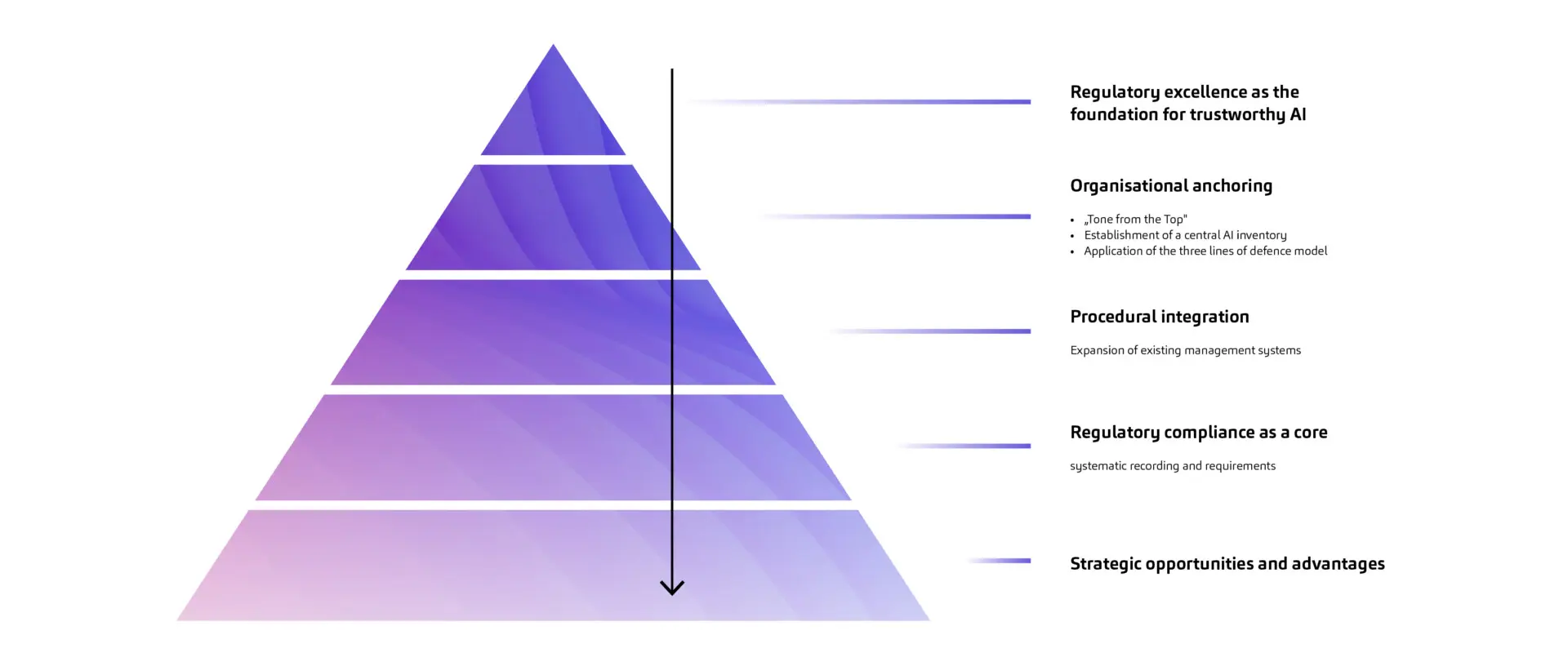

Top-down approach – AI governance as a mandatory programme

The financial sector is required to ensure the responsible, transparent and compliant use of AI, particularly in light of the EU AI Act. Effective AI governance in banks supports this through top-down roles and controls aligned with European AI regulation. This is how prototypes can be scaled in an audit-proof manner – with an AI inventory, the three lines of defence (3-LoD) model, model risk management (MRM) and traceable processes.

Why AI governance is the starting point

To successfully scale artificial intelligence (AI) in regulated organisations, it is not enough to develop innovative prototypes in the laboratory. Structured, audit-proof integration into existing processes is crucial. A clear top-down approach with defined roles, transparent processes and verifiable controls forms the basis for trustworthy and scalable AI – while also meeting the strict requirements of the EU AI Act, especially for high-risk systems. Among other things, this requires robust risk management systems, high-quality and fair training data, technical traceability, options for human intervention and comprehensive security measures.

Those who consider regulatory excellence from the outset not only minimise liability risks, but also build trust among employees, supervisory authorities, partners and customers. This means that AI becomes a strategic success factor rather than an experiment.

Best practices such as phased implementation, interdisciplinary governance teams and automated control mechanisms support this approach. Compliance with regulatory requirements is not an obstacle to innovation, but a catalyst for sustainable, resilient AI. In industries with high auditing and documentation requirements, such as banking, this approach is more than just recommended – it is the only option.

The consensus in regulation and specialist literature is clear: regulatory requirements and governance structures must precede operational deployment. The EU AI Act and industry-specific requirements demand that risks be addressed as early as the design phase, before models are rolled out into production.

Tone from the top for the introduction of AI governance

A robust top-down approach begins with firm organisational anchoring. Clear responsibilities at management level play a key role here – the principle of “tone from the top” is an integral part of the relevant guidelines from BaFin and the European Central Bank, and is also reflected in the EU AI Act.

In addition, a central AI inventory creates transparency regarding all applications, their risks, dependencies and areas of use. This overview forms the basis for effective controls, auditability and the obligation to provide evidence to supervisory authorities.
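A minimal sketch of what such an inventory could look like as a data structure is given below. It is an illustration under stated assumptions, not a prescribed schema: the class names `InventoryEntry` and `AIInventory`, the field names and the three risk tiers are hypothetical, and a real inventory would carry far more attributes (data sources, validation status, approvals).

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    # Illustrative tiers only; the mapping to legal categories is an assumption.
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"


@dataclass
class InventoryEntry:
    system_id: str
    purpose: str          # area of use
    owner: str            # accountable unit in the first line
    risk_tier: RiskTier
    depends_on: list[str] = field(default_factory=list)


class AIInventory:
    """Central register of AI applications (hypothetical sketch)."""

    def __init__(self) -> None:
        self._entries: dict[str, InventoryEntry] = {}

    def register(self, entry: InventoryEntry) -> None:
        # Each system may appear only once, so that evidence stays unambiguous.
        if entry.system_id in self._entries:
            raise ValueError(f"duplicate system_id: {entry.system_id}")
        self._entries[entry.system_id] = entry

    def by_tier(self, tier: RiskTier) -> list[InventoryEntry]:
        return [e for e in self._entries.values() if e.risk_tier is tier]

    def missing_dependencies(self) -> dict[str, list[str]]:
        # Dependencies that are referenced but not themselves inventoried.
        gaps: dict[str, list[str]] = {}
        for e in self._entries.values():
            missing = [d for d in e.depends_on if d not in self._entries]
            if missing:
                gaps[e.system_id] = missing
        return gaps


inventory = AIInventory()
inventory.register(InventoryEntry(
    "credit-scoring", "retail credit decisions", "Lending",
    RiskTier.HIGH, depends_on=["feature-store"]))
inventory.register(InventoryEntry(
    "faq-chatbot", "customer FAQ", "Service", RiskTier.LIMITED))
```

Queries such as `inventory.by_tier(RiskTier.HIGH)` or `inventory.missing_dependencies()` then give the control functions a single place to check which applications need the strictest treatment and where the overview is still incomplete.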

At the same time, the tried-and-tested three lines of defence (3-LoD) model is being extended to AI: the operational line is responsible for operations, the risk and compliance functions monitor compliance, and internal audit regularly reviews the governance framework as a whole.

Integrating processes instead of inventing them

In addition to organisational anchoring, successful implementation requires the procedural integration of AI into existing management systems. This means that governance, quality and risk structures must be expanded so that seamless documentation, continuous validation and traceable decision-making processes are guaranteed. This also includes a targeted adaptation of model risk management (MRM): dedicated processes for machine learning, model explainability and data governance ensure that uncertainties, model limits and possible biases can be systematically identified and managed.

The core of the top-down approach is consistent regulatory compliance. This includes a systematic process for recording all relevant legal, technical and industry-specific requirements – from the EU AI Act and the General Data Protection Regulation (GDPR) to MaRisk and DORA.

A particular focus is placed on high-risk systems as defined by the EU AI Act, which are subject to strict requirements regarding data quality, transparency, human oversight and cyber security. Compliance with these requirements includes robust risk mitigation mechanisms, complete documentation, full auditability, explainable results, continuous monitoring and incident reporting. This ensures that highly critical applications can be operated not only in a technically reliable manner, but also in a way that is unassailable from a regulatory perspective.
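The requirements listed above can be turned into a simple release gate. The following is a minimal sketch under assumptions: the control names in `REQUIRED_CONTROLS` merely mirror the items named in the paragraph above, and the functions `control_gaps` and `release_allowed` are hypothetical helpers, not part of any regulation or library.

```python
# Controls a high-risk system must evidence before release (illustrative list
# taken from the paragraph above; a real catalogue would be more detailed).
REQUIRED_CONTROLS = (
    "risk_mitigation",
    "documentation",
    "auditability",
    "explainability",
    "monitoring",
    "incident_reporting",
)


def control_gaps(evidence: dict[str, bool]) -> list[str]:
    """Return the required controls for which no evidence is recorded."""
    return [c for c in REQUIRED_CONTROLS if not evidence.get(c, False)]


def release_allowed(evidence: dict[str, bool]) -> bool:
    """A system may go live only if every required control is evidenced."""
    return not control_gaps(evidence)


# Example: a credit-scoring model with two controls still open.
scoring_evidence = {
    "risk_mitigation": True,
    "documentation": True,
    "auditability": True,
    "explainability": False,
    "monitoring": True,
}
open_items = control_gaps(scoring_evidence)
```

In this example `open_items` contains `explainability` and `incident_reporting`, so `release_allowed(scoring_evidence)` returns `False`. The point of the design is that a missing entry counts as a gap: evidence has to be provided actively rather than assumed.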

Focus on the EU AI Act: risk-based management

The top-down approach to AI governance in regulated organisations offers specific opportunities and strategic advantages that go well beyond the minimum regulatory requirements. A consistent focus on legal certainty allows liability risks to be systematically minimised and regulatory requirements, such as those formulated in the EU AI Act, to be met in full. Companies that manage their AI systems on this basis gain the trust of supervisory authorities, customers and market partners – a clear competitive advantage in environments where transparency and traceability are crucial. The structured integration of AI into governance and control systems not only creates a resilient, audit-proof foundation, but also paves the way for proactive, efficient dialogue with the supervisory authority, because evidence can be produced in line with verification standards at any time.

However, this approach also brings challenges. Strict regulatory requirements can slow down innovation, especially because the initial effort is high: inventories and control mechanisms have to be set up and existing management systems adapted. The commitment of resources – both human and financial – is considerable compared to less regulated approaches. There is also a risk that governance and compliance will be over-engineered “on paper” without creating practical added value for innovation.

Ultimately, however, the top-down approach is recommended wherever trust, audit compliance and legal certainty are not optional but obligatory. Companies that use targeted awareness sessions and specific training on AI data security to make employees aware of risks and strengthen their skills create the necessary acceptance for these structures. On this basis, AI can be used safely, effectively and sustainably in regulated industries.
