Hybrid approach – speed and AI compliance in harmony

The hybrid approach ensures both speed and AI compliance. Combining a top-down framework with bottom-up innovation creates governance that follows an agile rhythm – secure, auditable and adaptable.

- Balancing AI compliance and innovation

- Case study: Hybrid implementation of a GenAI assistant in a development bank

- Phase 1: Initialization - Minimum viable governance & proof of concept

- Phase 2: Iteration 1 - MVP & governance refinement

- Phase 3: Iteration 2 - Scaling & formal controls

- Result: speed and security in harmony

- Opportunities and strategic advantages

- Challenges and risks

- Conclusion

Balancing AI compliance and innovation

As AI technology advances rapidly and regulatory requirements tighten, many organizations are looking for an approach that delivers both speed and security.

The hybrid approach offers exactly this middle ground: it combines the strategic control of the top-down model with the innovative power and practical relevance of the bottom-up approach. The result is a governance model that pairs predictable risk with visible business benefits and can adapt flexibly to new technological, regulatory and market developments.

At the heart of this model is a clearly defined framework of roles and responsibilities. Senior leadership, such as the board of directors or executive management, defines overarching values, risk principles and binding minimum controls that apply to all AI initiatives. These are typically based on requirements such as the EU AI Act, standards such as ISO/IEC 42001 for AI management systems, or the guidelines of the relevant supervisory authorities.

At the same time, the specialist departments contribute their use cases via a defined innovation pipeline, from the initial idea through proof of concept (PoC) and minimum viable product (MVP) to scalable implementation. A Center of Excellence (CoE) forms the interface between the strategic framework and operational implementation and acts as the central link between the two.

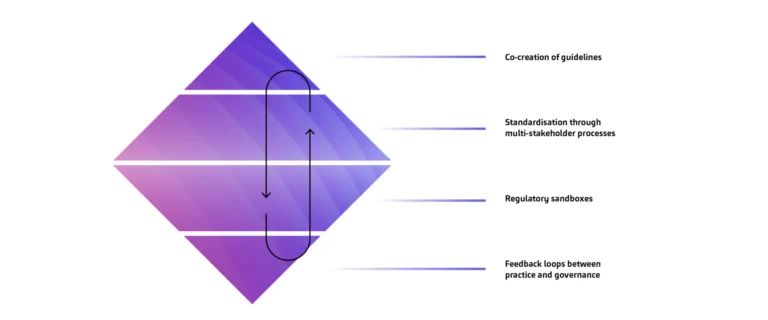

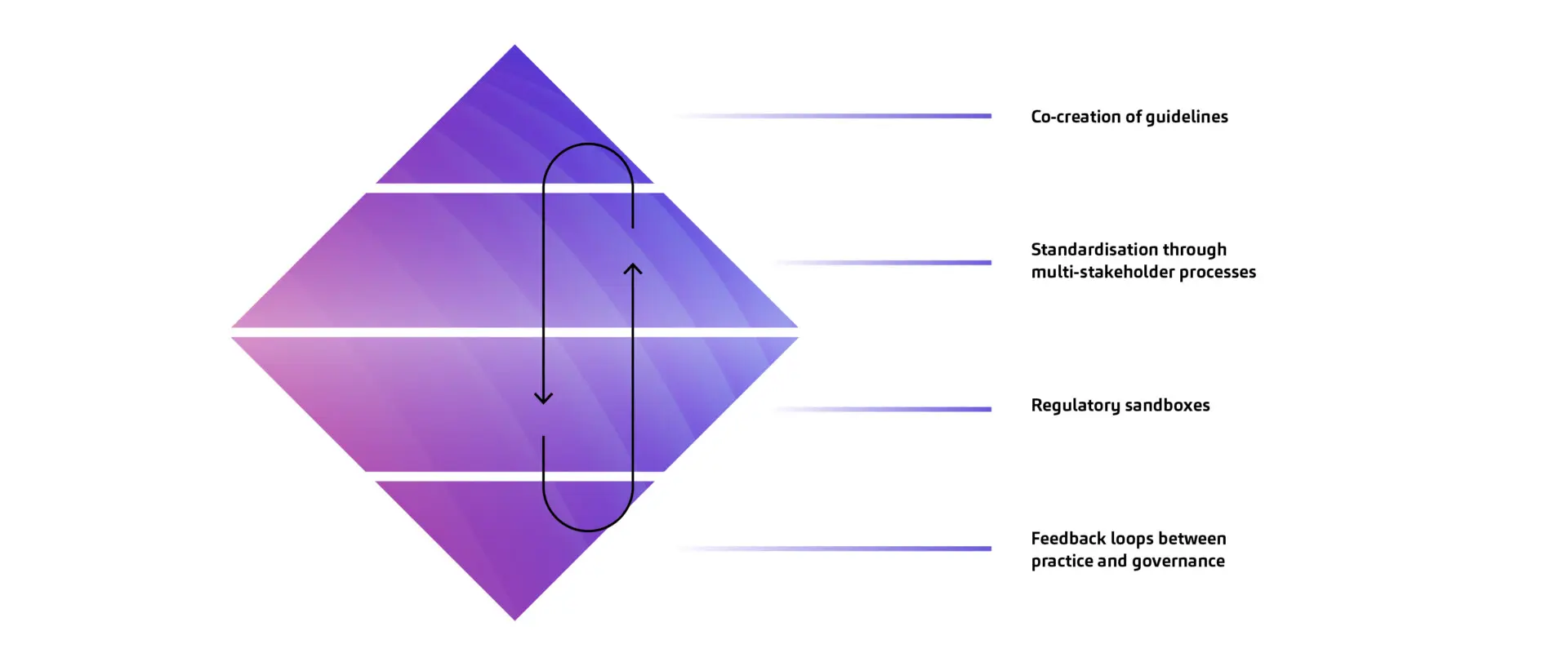

The CoE curates methods, offers enablement programs, assures quality and makes sure that findings from projects are systematically fed back into standards, policies and training.

A key element of the hybrid approach is minimum viable governance (MVG). It defines the smallest common governance baseline that every AI project must meet in order to be compliant and controlled without slowing down innovation cycles. This includes a central AI inventory, a risk-based classification (for example, flagging systems that count as “high risk” under the EU AI Act) and lightweight but auditable control points. Consistent reporting creates transparency regarding the risk exposure, value contributions, incidents and maturity levels of all projects.

In addition, regulatory sandboxes and risk-based latitude allow experimentation with real data, processes and user groups within clearly defined boundaries. Technical telemetry, ongoing monitoring, human oversight, defined test periods and documented rollback plans are standard.
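The MVG building blocks described here (inventory entry, risk class, auditable control points) can be sketched in a few lines of Python. This is only an illustrative sketch: the control names, the three risk tiers and the `InventoryEntry` type are assumptions made for the example, not the controls of any specific organization, and a real EU AI Act classification requires a proper legal assessment.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskClass(Enum):
    # Illustrative tiers only (assumption); not a legal classification.
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"


# Hypothetical minimum controls that every AI project must evidence.
BASELINE_CONTROLS = {"owner_assigned", "risk_classified", "rollback_plan"}
# Hypothetical additional controls for high-risk systems.
HIGH_RISK_CONTROLS = {"human_oversight", "model_documentation"}


@dataclass
class InventoryEntry:
    """One record in the central AI inventory."""
    name: str
    owner: str
    risk_class: RiskClass
    completed_controls: set[str] = field(default_factory=set)

    def required_controls(self) -> set[str]:
        # The baseline applies to everyone; high-risk systems get extra controls.
        required = set(BASELINE_CONTROLS)
        if self.risk_class is RiskClass.HIGH:
            required |= HIGH_RISK_CONTROLS
        return required

    def missing_controls(self) -> set[str]:
        return self.required_controls() - self.completed_controls

    def passes_mvg(self) -> bool:
        # A project meets MVG once no required control is outstanding.
        return not self.missing_controls()
```

An entry for a high-risk assistant that has completed only the baseline controls would report `human_oversight` and `model_documentation` as missing, which is the kind of lightweight, auditable checkpoint the MVG idea relies on.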

Governance in the hybrid model follows an agile rhythm: instead of rigid sets of rules that are only updated annually, checklists, threshold values and metrics are reviewed, evaluated and adjusted if necessary at fixed intervals – every six to eight weeks, for example. This keeps the organization agile without having to constantly renegotiate its basic principles.

Finally, cross-functional product teams (squads) develop and implement AI applications in their respective areas. The Center of Excellence, as the central competence hub, ensures that data standards, documentation formats such as model cards, evaluation frameworks, security policies and explainability requirements are understood and applied uniformly. This dual structure enables speed in breadth and consistency in depth, and makes the hybrid approach a powerful model for agile AI governance.

Case study: Hybrid implementation of a GenAI assistant in a development bank

The introduction of a Generative AI assistant in a development bank is an example of how a hybrid governance approach can be successfully implemented in practice. The project combined strategic governance with agile service development and relied on iterative development cycles to ensure both speed and security.

The aim was to provide a production-ready GenAI service in the shortest possible time and at the same time establish a resilient governance framework that grows with the requirements and risks.

Phase 1: Initialization - Minimum viable governance & proof of concept

The start of the project followed the principle of minimum viable governance (MVG). Even before technical development began, central governance components were defined – including responsibilities, risk classes, minimum controls and reporting formats. This top-down element ensured that the project was auditable from the outset and that minimum regulatory requirements such as data protection and model documentation were met.

At the same time, the specialist team developed the first functional version of the GenAI assistant as part of a proof of concept (PoC). The focus was on the rapid validation of central functions such as document search, semantic answer generation and user interaction. This dual approach prevented uncontrolled innovation and enabled early user feedback.

Phase 2: Iteration 1 - MVP & governance refinement

Based on the results of the PoC, a minimum viable product (MVP) was created, which was tested under real conditions with selected user groups. A structured feedback loop linked the practical findings of the users with targeted adjustments to governance.

At a strategic level, guidelines were refined – for example, for logging prompts, assessing the risk of generated responses and defining thresholds for human reviews (human-in-the-loop). At the same time, the specialist team optimized the service quality by fine-tuning the models, improving the retrieval pipelines and making adjustments to the user interface. This ensured that technical development and governance maturity were always synchronized.
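How thresholds for human review and prompt logging might fit together can be illustrated with a small sketch. Everything specific here is an assumption for the sake of the example: the 0-to-1 risk score, the threshold values, and the names `ReviewPolicy`, `route_response` and `audit_record` are hypothetical and not taken from the project described.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ReviewPolicy:
    # Hypothetical thresholds on a 0..1 risk score (assumptions).
    review_threshold: float = 0.6
    block_threshold: float = 0.9


def route_response(risk_score: float, policy: ReviewPolicy = ReviewPolicy()) -> str:
    """Decide how a generated response is handled based on its risk score."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= policy.block_threshold:
        return "block"
    if risk_score >= policy.review_threshold:
        return "human_review"  # human-in-the-loop step
    return "auto_release"


def audit_record(prompt: str, risk_score: float,
                 policy: ReviewPolicy = ReviewPolicy()) -> str:
    """Build one JSON log line so each routing decision stays traceable."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prompt": prompt,
        "risk_score": risk_score,
        "decision": route_response(risk_score, policy),
    })
```

Keeping the thresholds in a separate policy object means a governance iteration can tighten or relax them, for example `ReviewPolicy(review_threshold=0.4)`, without touching the routing logic itself.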

Phase 3: Iteration 2 - Scaling & formal controls

In the second iteration, the focus was on scaling: the GenAI assistant was released to a broader user base and the operating environment was designed for higher loads and more diverse use cases. At the same time, the previously developed governance rules were translated into formal controls – including regular audits, automated monitoring dashboards and full integration into the company’s central AI inventory.

Top-down and bottom-up elements also ran in parallel during this phase: while the governance side implemented binding policies, standardized evaluation metrics and incident management processes, service development rolled out additional features that were requested by users in the feedback rounds.

Result: speed and security in harmony

The hybrid approach made it possible to bring the first use case into production as a robust and user-oriented GenAI assistant within just a few development cycles. MVG elements defined early on ensured compliance and technical traceability, while agile bottom-up service development continuously increased business value.

The iterative governance cycles resulted in a living framework that evolved with the technology and its use – a prime example of how speed and security can be successfully combined in AI implementation.

Opportunities and strategic advantages

The hybrid approach offers significant added value through its balance of speed and security. The clear combination of a strategic top-down framework and agile bottom-up service development enables organizations to bring innovations to market quickly without neglecting regulatory obligations.

In addition, the close integration of both directions promotes an agile and responsible corporate culture. Teams experience that flexibility and compliance are not opposites, but can reinforce each other. This cultural change is a key factor for the sustainable acceptance of AI, especially in industries with stringent audit and documentation requirements.

Another advantage is future viability and adaptability: iterative governance cycles ensure that rules, controls and methods can be continuously adapted to new regulatory, technological or business conditions. This ensures that the organization remains resilient even in the face of disruptive changes.

Finally, the approach enables the efficient use of resources: thanks to the principle of minimum viable governance, investments and attention flow to where they have the greatest impact – in risk-relevant projects and in direct value creation.

Challenges and risks

Despite its advantages, the hybrid approach is not without risks. Its strength, the simultaneous integration of two management logics, is also the source of its complexity. The balance between clear guidelines and freedom must be continually recalibrated so that innovation is not stifled and no gaps in control arise.

The model also requires an intensive coordination effort: top-down governance, bottom-up development and center-of-excellence functions must work closely together. Without well-established communication and decision-making channels, the risk of duplication or contradictory decisions increases.

A particular risk is the “governance gap”: if governance adaptations do not iterate as quickly as technical development, unregulated areas can emerge temporarily, with potential legal, security and reputational consequences.
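One simple way to make such a gap visible is to compare the date of the latest release with the date of the latest governance review. The following is a minimal sketch under stated assumptions: the function names are hypothetical, and the default tolerance of 56 days merely mirrors the upper end of the six-to-eight-week review rhythm mentioned earlier.

```python
from datetime import date


def governance_gap_days(last_release: date, last_governance_review: date) -> int:
    """Days by which the latest release postdates the latest review (0 if none)."""
    return max((last_release - last_governance_review).days, 0)


def has_governance_gap(last_release: date, last_governance_review: date,
                       max_lag_days: int = 56) -> bool:
    # max_lag_days is an assumed tolerance (eight weeks), not a prescribed value.
    return governance_gap_days(last_release, last_governance_review) > max_lag_days
```

A check like this could feed the regular reporting, so that a service whose releases have outrun its governance reviews is flagged before the gap grows.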

Conclusion

When implemented correctly, the hybrid approach combines the strengths of the top-down and bottom-up models to create sustainable, agile and secure AI governance. However, it requires discipline, coordination and a clear accountability structure to fully exploit its benefits and manage the risks.

For organizations that prioritize both speed and security, it offers a proven path to the scalable and future-proof use of AI.
