2025: The first year of AI regulation in Europe – taking stock and looking ahead

The EU AI Act is the world's first comprehensive regulation governing artificial intelligence (AI). With its risk-based approach, it aims to make AI safe, trustworthy and legally compliant. The year 2025 marks a turning point for the European AI landscape. This article looks at what the EU AI Act has already changed, where challenges remain and what developments companies can expect in the coming years.

- Chronological review of the year 2025 – What has happened?

- Initial effects on practice, the economy and regulation

- Technical and legal issues – what remains unresolved in 2025

- From 2026 to 2030: How AI regulation in Europe is evolving

- Conclusion: 2026 will be the decisive year for AI compliance

Chronological review of the year 2025 – What has happened?

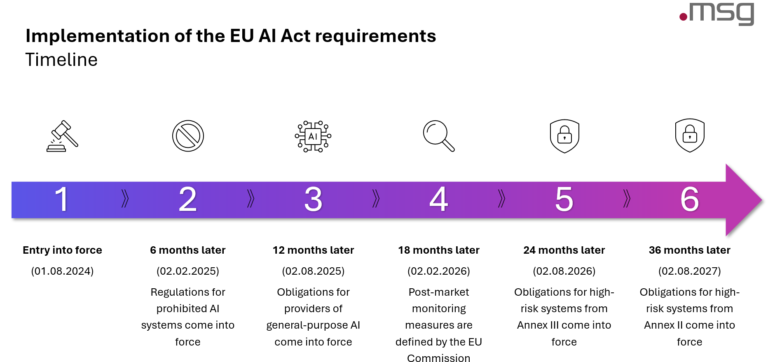

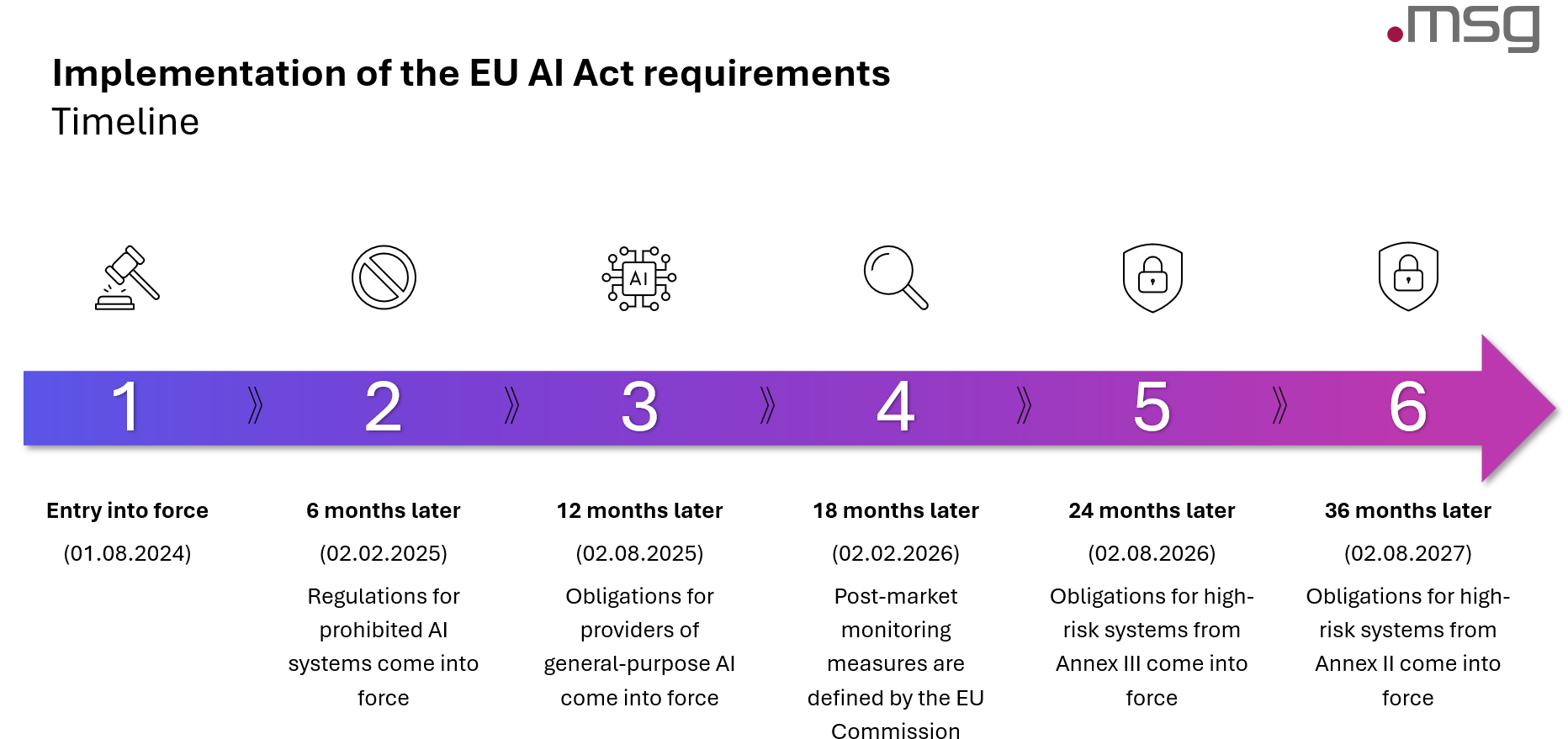

With the entry into force of the EU AI Act on 1 August 2024, the European Union created the world’s first comprehensive and legally binding set of rules for the use of artificial intelligence (AI). As part of a broader package of policy measures, the EU AI Act aims to strengthen trust in this rapidly developing technology and to ensure safety, fundamental rights and human-centred AI within the EU.

Using four risk categories (unacceptable risk, which is prohibited, high risk, limited risk and minimal risk), the EU AI Act imposes requirements on AI systems that become stricter as the risk increases.

The first provisions have already started to apply this year. For example, prohibited AI practices, such as certain uses of real-time biometric facial recognition, have been banned since 2 February 2025.

Companies have also borne greater responsibility since 2 February 2025. Anyone using AI systems must make sure that employees are adequately trained in AI. Article 4 of the EU AI Act requires providers and deployers to ensure that their employees have sufficient knowledge and skills in dealing with AI.

The AI Act set 2 May 2025 as the target date for the so-called codes of practice, and the EU Commission published the General-Purpose AI Code of Practice in July 2025. The codes serve as practical guidelines on how providers can voluntarily demonstrate that they comply with high standards of transparency, quality, risk management and security even before the law is fully applied. They are intended to support companies in establishing responsible AI governance at an early stage and implementing regulatory requirements in a predictable manner.

On 2 August 2025, specific rules for general-purpose AI (GPAI) models also began to apply. Providers of large AI models, such as GPT from OpenAI, are now obliged to implement extensive transparency measures, assess risks and ensure compliance with copyright law. In the event of potential systemic risks, there is also an obligation to notify the competent European authorities. The aim of these measures is to increase the safety of powerful AI models and at the same time enable innovation.

At the same time, the EU has introduced a multi-level governance model. New bodies and contact points have been created at European level, such as the European AI Office, which will take on a coordinating role in future. National supervisory structures are also taking shape in Germany: the Federal Network Agency (BNetzA) will take on the central tasks of market surveillance in future and enforce the requirements of the AI Act. Sector-specific authorities, such as BaFin, will supplement this supervision in their respective sectors. Overall, this has already created a clearly defined system of responsibilities and tasks for the secure and legally compliant use of AI in Europe.

Initial effects on practice, the economy and regulation

The past two years have already seen a clear upward trend in the use of AI in the banking sector. Looking back to the year 2025 in particular, it is clear to what extent the use of AI technologies has now become established. Banks primarily emphasise the business benefits, which range from the acceleration of operational processes to more precise valuations and improved customer contact. This trend made it clear in 2025 that AI is no longer a vision of the future, but has found a permanent place in day-to-day business operations.

At the same time, institutions became more aware of the approaching regulatory deadlines when the regulation for banned AI systems came into force.

In addition to the implementation and introduction of new AI systems into the day-to-day business of banks, the topics of AI governance, compliance and risk management have also become increasingly critical for the sector.

Lisa Weinert, Manager | Artificial Intelligence | msg for banking

Many banks have therefore already started to establish AI governance in their existing structures and are working on various measures such as drafting special guidelines, developing responsibility concepts, creating new roles such as the “Chief AI Officer” and expanding existing control mechanisms, for example along the three lines of defence model.

In addition, banks are making initial attempts to determine the risk categories of the AI systems they develop and use through targeted self-assessment. The aim is to identify potentially high-risk applications before further regulatory requirements take effect, for example when the high-risk obligations of the EU AI Act begin to apply on 2 August 2026.

In the banking sector, classification as a high-risk system primarily concerns applications such as automated credit scoring. Providers and deployers of such systems must implement extensive regulatory requirements, which include structured risk management, quality management and human oversight. Meeting these requirements calls for timely organisational and technical preparation.
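A self-assessment of this kind can start as a simple inventory check. The following is a minimal sketch, not a legal classification: the names `AISystem`, `HIGH_RISK_USE_CASES`, `HIGH_RISK_CONTROLS` and `missing_controls` are hypothetical, and the only high-risk use case listed is the credit scoring example mentioned above. A real inventory would need a mapping reviewed by legal and compliance functions.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Assumption: only the credit scoring example from the text is listed here.
HIGH_RISK_USE_CASES = {"credit_scoring"}

# The three requirement areas named in the text for high-risk systems.
HIGH_RISK_CONTROLS = ("risk management", "quality management", "human oversight")


@dataclass
class AISystem:
    """One entry in a (hypothetical) AI system inventory."""
    name: str
    use_case: str
    controls_in_place: Set[str] = field(default_factory=set)


def is_potentially_high_risk(system: AISystem) -> bool:
    """Flag a system for closer review based on its use case tag."""
    return system.use_case in HIGH_RISK_USE_CASES


def missing_controls(system: AISystem) -> List[str]:
    """List the high-risk controls a flagged system has not yet documented."""
    if not is_potentially_high_risk(system):
        return []
    return [c for c in HIGH_RISK_CONTROLS if c not in system.controls_in_place]


if __name__ == "__main__":
    inventory = [
        AISystem("retail-scoring", "credit_scoring", {"risk management"}),
        AISystem("service-chatbot", "customer_chat"),
    ]
    for system in inventory:
        gaps = missing_controls(system)
        status = "gaps: " + ", ".join(gaps) if gaps else "no gaps flagged"
        print(f"{system.name}: {status}")
```

Keeping the use case mapping and the control list as plain data makes it easy to extend the check as supervisory expectations are specified further.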

The European supervisory authority is also sending a clear signal for the future: AI is high on the agenda as a key component of the digital transformation of the banking sector. At national level, BaFin in particular is monitoring the use of AI in the financial sector, for example for credit scoring, fraud detection or payment transactions, and will continue to specify its regulatory expectations in terms of governance, risk management, data security and internal controls. It can therefore be assumed that the topic will be intensively monitored and supported in the future. For banks, this means preparing for additional requirements and audits in the future. A forward-looking and comprehensive approach to AI governance, compliance and risk management therefore remains essential.

Technical and legal issues – what remains unresolved in 2025

Although the EU AI Act has now been in force for just over a year and many of its provisions are already being applied, there are still unanswered questions and initial criticism of its practical feasibility.

One of the central problems is the very broad definition of AI systems, which makes it difficult for companies and authorities to clearly determine which AI systems are subject to the respective obligations of the regulation.

A further challenge arises from the rapid pace of technological development, which in some cases overtakes the legislative process and increases the risk of regulatory blind spots.

At the same time, there is a lack of concrete technical specifications and standards that can provide assistance in areas such as data governance, robustness, transparency or risk and quality management. These gaps lead to considerable uncertainty in terms of practical implementation and compliance.

In addition, the EU AI Act relies heavily on self-certification rather than independent controls of AI providers. This can lead to deficits in supervision, quality assurance and liability and further increases uncertainty on the part of companies.

These and other issues in the existing regulatory requirements leave open how the EU will deal with them in the future and what additional requirements companies may face in the coming years.

From 2026 to 2030: How AI regulation in Europe is evolving

In the future, companies in Europe will face a significant change in the regulatory approach to artificial intelligence. While the first provisions of the EU AI Act have already been implemented, a phase will now begin from 2026 in which central obligations will gradually become binding and the establishment of comprehensive AI governance in the EU will be driven forward.

Until 2026, the focus will primarily be on the specification of technical requirements, the establishment of national supervisory structures and the introduction of transparent notification and reporting obligations.

Hannah Gürsching Analyst | Artificial Intelligence | msg for banking

At the same time, providers of general-purpose AI must prepare for stricter requirements, for example in terms of transparency, risk management and copyright compliance. The European Commission will also provide guidelines for practical implementation, in particular for the monitoring of AI systems once they have entered the market.

Most of the EU AI Act will apply from 2 August 2026. From then on, high-risk systems in particular will have to fulfil all regulatory requirements. By the same date, each member state must have established at least one so-called AI regulatory sandbox in which companies can test new systems under official supervision.

Further obligations for AI systems that are part of already regulated products will follow from 2 August 2027. Providers of general-purpose AI models that were placed on the market before 2 August 2025 must also be fully compliant by then. For AI components of certain large-scale IT systems, transitional periods apply until the end of 2030.
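For planning purposes, the milestones described in this article can be kept in a small lookup structure. The sketch below is illustrative only: `Milestone`, `MILESTONES`, `milestones_reached` and `next_milestone` are hypothetical names, and where the article gives only a month or a year, the exact day is an assumption that should be checked against the regulation text.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class Milestone:
    key_date: date
    description: str


# Milestones as described in the article, in chronological order.
MILESTONES = [
    Milestone(date(2025, 2, 2), "Prohibited AI practices banned; AI skills duty (Article 4)"),
    Milestone(date(2025, 8, 2), "Obligations for GPAI models"),  # exact day assumed
    Milestone(date(2026, 8, 2), "High-risk requirements; regulatory sandboxes"),
    Milestone(date(2027, 8, 2), "AI in regulated products; older GPAI models"),  # day and month assumed
    Milestone(date(2030, 12, 31), "End of transitional periods for large-scale systems"),
]


def milestones_reached(on: date) -> List[Milestone]:
    """Return all milestones whose key date is on or before `on`."""
    return [m for m in MILESTONES if m.key_date <= on]


def next_milestone(on: date) -> Optional[Milestone]:
    """Return the earliest milestone strictly after `on`, or None if none remain."""
    upcoming = [m for m in MILESTONES if m.key_date > on]
    return min(upcoming, key=lambda m: m.key_date) if upcoming else None


if __name__ == "__main__":
    today = date(2025, 12, 31)
    for m in milestones_reached(today):
        print(f"already applies since {m.key_date}: {m.description}")
    upcoming = next_milestone(today)
    if upcoming is not None:
        print(f"next: {upcoming.key_date}: {upcoming.description}")
```

A compliance team could extend each entry with an owner and a status field to track preparation against each date.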

These developments show that the EU AI Act will be with companies for many years to come and will require forward-looking planning, robust governance structures and the ability to adapt to ongoing regulatory developments.

Conclusion: 2026 will be the decisive year for AI compliance

A look at the upcoming regulatory milestones clearly shows that 2026 will be a key phase for companies in dealing with AI. After the technology has rapidly established itself in recent years and created significant business benefits, the priorities are now clearly shifting towards regulation, governance and risk management.

The requirements of the EU AI Act will enter their decisive phase in 2026: high-risk systems must be fully compliant from August, GPAI providers and users will face specific transparency and risk obligations and supervisory authorities will begin to actively exercise their new powers.

Companies must now master the balancing act between innovation and regulation. They should therefore firmly establish AI governance, assess risks at an early stage, strengthen documentation and competences and actively prepare for upcoming audits. Those who act proactively in 2026 will not only create regulatory certainty, but will also lay the foundations for the sustainable, responsible use of AI.
